Using the Strangler Pattern to Break Down Your E-Commerce Monolith

Monolithic platforms like Shopify, Oracle ATG, and BigCommerce are easy to get up and running. However, they lock you into platform-specific constraints, and you may end up feeling stuck with one-size-fits-all software.

You face similar challenges if you’ve built your own e-commerce monolith. The more tightly its components are intertwined, the harder it is to modify features, scale individual components, or divide the work among teams. After years of using one of these systems, technical debt builds up and every change gets harder to implement.

Microservices address these problems by letting you modularize your e-commerce business. That makes it easier to adapt quickly to the wealth of new opportunities to engage with your customers, which is a competitive advantage. That’s why modern tech companies like Netflix, Amazon, and Etsy switched to microservices.

Migrating from a monolith to microservices poses many technical and business challenges. One approach that helps is the strangler pattern, which replaces your monolith one service at a time.

In this post, you’ll learn how to use the strangler pattern to break down each piece of your e-commerce monolith until you’ve replaced it with discrete, scalable microservices.


Why Microservices?

Microservices have several advantages over a monolithic approach:

Technical limitations

- One-size-fits-all platforms are fast and convenient when you get started, but as your business grows, their limitations start to get in the way.

- With microservices, you are free to use the programming languages and third-party services that are best for each piece of your application. Because services are modular and scale independently, a decision you make for one service doesn’t tie you down elsewhere.

Flexibility

- Microservices are independent and decoupled from your frontend. That means you can easily build new ways to reach your customers and wire them up to your services without having to reinvent the wheel each time.

- Because you own the services, you can make each one match your needs, defining its data format and functionality. You can also control how user access is structured.

- Flexibility also prevents bottlenecks, because your teams can work on their own services without depending on each other.

Security

- Attackers who gain access to a monolithic platform often gain access to everything: customer, product, and order data. Microservices let you create silos between services, so a breach in one service exposes less.

Performance

- Because you can scale services independently and dynamically focus resources on the most heavily trafficked ones, you can improve performance. For example, Best Buy reduced its catalog API sync time from 24 hours to under 15 minutes.


What is the Strangler Pattern?

The strangler pattern takes its name from the strangler fig tree, which grows around a host tree and gradually replaces it. The pattern works the same way: you use your existing application as a base, build a new service that replaces one specific part of it, and once the new service is done, retire the old part of the application.

You repeat this service by service until the new microservices replace your entire monolith. At that point you have ‘strangled’ the old code and can abandon it completely.

There are three phases in the strangler lifecycle:

- Transform the application by creating new versions of existing services.

- Co-exist with the old application running alongside an ever-increasing number of microservices.

- Eliminate the old when the new services completely replace the old system.
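One lightweight way to see which phase you’re in is to keep a ledger of which system serves each capability. The sketch below is a simplification under stated assumptions: the capability names and the "monolith"/"microservice" labels are hypothetical, and in practice transforming the next service overlaps with the co-exist phase rather than strictly preceding it.

```javascript
// Hypothetical migration ledger: which system currently serves each capability.
const ledger = {
  billingAddress: "microservice",
  checkout: "monolith",
  catalog: "monolith",
};

// Capabilities still waiting to be strangled.
function remainingOnMonolith(ledger) {
  return Object.keys(ledger).filter((name) => ledger[name] === "monolith");
}

// Simplified mapping from the ledger to a strangler lifecycle phase.
function migrationPhase(ledger) {
  const total = Object.keys(ledger).length;
  const left = remainingOnMonolith(ledger).length;
  if (left === total) return "transform"; // nothing cut over yet; first service in progress
  if (left > 0) return "co-exist"; // old and new systems run side by side
  return "eliminate"; // the monolith serves nothing and can be retired
}

console.log(remainingOnMonolith(ledger)); // [ 'checkout', 'catalog' ]
console.log(migrationPhase(ledger)); // co-exist
```

The monolith is only safe to retire once remainingOnMonolith returns an empty list.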

Strangler vs. waterfall approach

The more common alternative to the strangler pattern is waterfall replacement, in which you build the entire new system before switching over. It requires you to commit to a long development and deployment cycle, which increases the risk of bugs and lowers your velocity.

The waterfall method can take well over a year to deliver results, whereas the more agile strangler methodology lets you make progress in bursts of six months or less. It naturally divides the work into attainable targets, so developers stay motivated by completing tasks that deliver visible results.

With the strangler pattern, you can use each new microservice as soon as it is ready, with no need to build a completely new system first. Some recommend rolling out changes monthly. First, you enjoy the advantages quickly. Second, your developers can move on to the next project. And if issues occur, they can roll back one piece of the system more easily than the whole thing.

The strangler pattern is often the better option. It lets you migrate each piece of your infrastructure step by step, in a manageable, low-risk way, and deliver business value faster.

The downside to the strangler pattern

There are some caveats. Migrating a complete application one step at a time takes a while. Successful strangler-pattern projects suggest staying consistent and making sure your management team commits to the long-term effort.

Microservices work best with development practices like continuous integration and continuous deployment, and with engineers who are familiar with those practices. Finding the right team is just as important as picking the right languages and frameworks. Make sure your developers are on board with the new processes: if they understand the advantages of the changes, buy-in will be higher.

One way to mitigate the risks of migrating to microservices is to use an established e-commerce platform like fabric. We’ve built fabric on microservices and can work with your team to ensure a smooth migration.


Using the Strangler Pattern in E-Commerce

Let’s look at how to implement the strangler pattern. I’ll take the billing service provided by the monolith and move it to a new microservice. Then, I’ll show you how to migrate important data and features without breaking your existing application.

Step 1: Decide where to start the migration

The service you choose to start with depends on your needs and the platform you’re using. There are two common approaches.

- Critical features that you need to upgrade: If your legacy codebase can’t be updated, or you’re facing performance issues that cost you customers (like a slow checkout experience), starting here might be best. Make sure everything is watertight before deploying. This approach takes more time but has more impact.

- Pick something less critical to test the process: This is a great idea if your developers are implementing microservices for the first time, because it’s less risky. However, it delivers less value to the business.

Let’s look at moving an e-commerce billing address service from a monolithic platform like Oracle ATG to a microservice hosted on AWS. Billing services are often mission-critical in online stores: if they’re slow or prone to errors, they can prevent you from collecting revenue from customers.

I’ll only include a couple of attributes, but you can replicate this general pattern with whatever data you need.
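To make those attributes concrete, here’s a minimal sketch of the record the new billing address service could store (a profile ID and an address) and a function that maps a row exported from the monolith into it. The column names PROFILE_ID, ADDRESS_1, and CITY are hypothetical; Oracle ATG’s actual profile schema depends on your installation, so adjust them to match your database.

```javascript
// Map one exported monolith row into the new service's billing address record.
// PROFILE_ID, ADDRESS_1 and CITY are hypothetical column names; use your own.
function toBillingAddress(row) {
  const profileId = Number(row.PROFILE_ID);
  if (!Number.isInteger(profileId) || profileId <= 0) {
    throw new Error(`Invalid profile ID: ${row.PROFILE_ID}`);
  }
  const street = String(row.ADDRESS_1 ?? "").trim();
  const city = String(row.CITY ?? "").trim();
  if (street === "" || city === "") {
    throw new Error(`Incomplete address for profile ${profileId}`);
  }
  // The new service keeps just two attributes: the profile ID and the address.
  return { profileId, address: `${street}, ${city}` };
}

const record = toBillingAddress({
  PROFILE_ID: "543",
  ADDRESS_1: "29 Acacia Road",
  CITY: "Springfield",
});
console.log(record.address); // 29 Acacia Road, Springfield
```

Rejecting incomplete rows at this stage surfaces bad legacy data before it reaches the new service, rather than after.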

Step 2: Build a middle layer

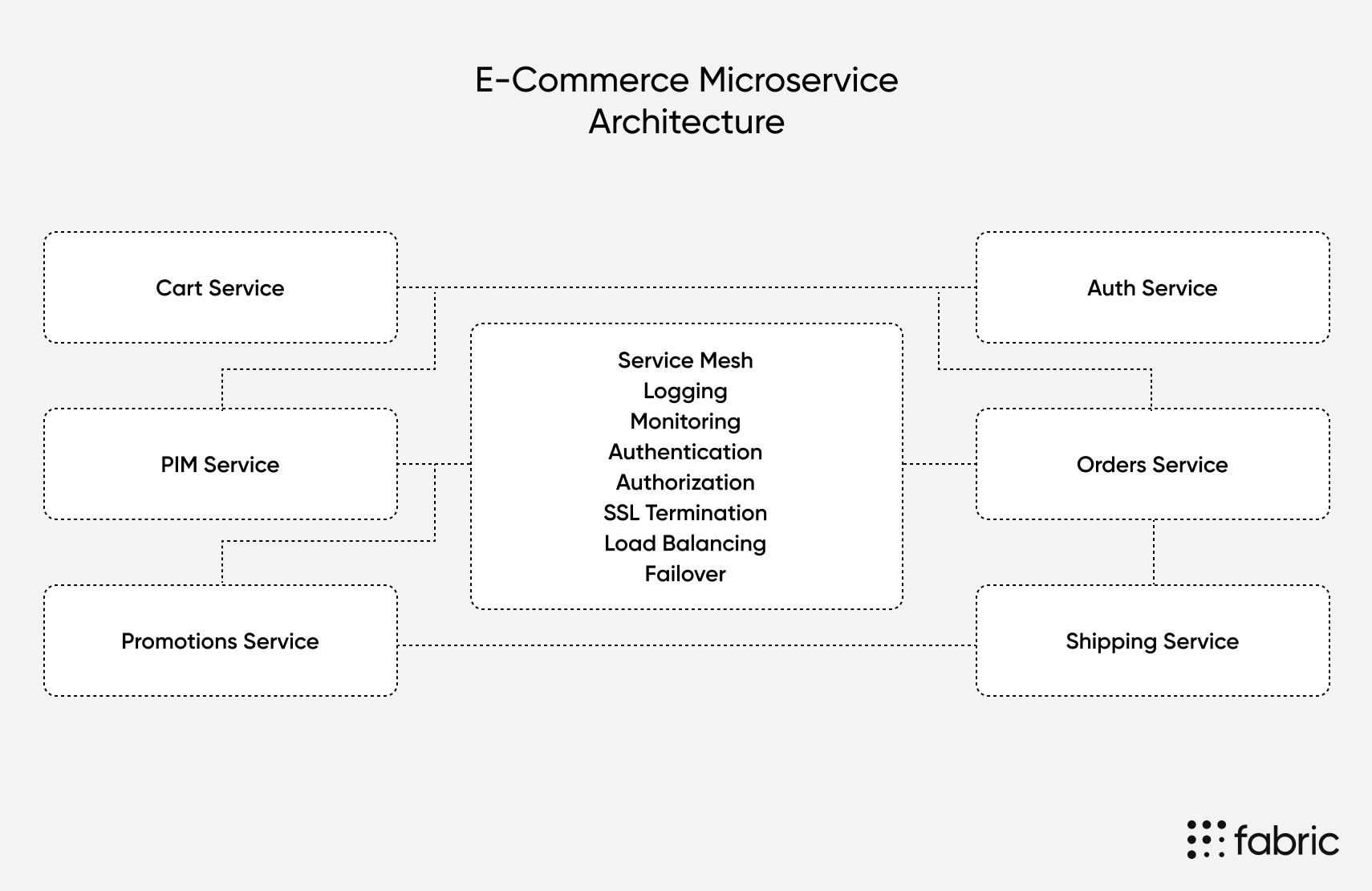

An API gateway can receive service calls and route each one to either your existing monolith or a new service. AWS publishes a guide on how to set this up. A service mesh is also useful: it handles load balancing and routing to manage the flow of data between your services.
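The routing rule at the heart of this middle layer is simple: requests for paths that have been migrated go to the new service, and everything else falls through to the monolith. Here’s a minimal sketch of that decision in plain JavaScript, independent of any particular gateway or mesh. The path prefixes and upstream names are hypothetical; in a real deployment, the same table would live in your API gateway or service mesh configuration.

```javascript
// Hypothetical routing table: path prefix -> upstream that now owns it.
// Anything not listed is still served by the monolith.
const routes = [
  { prefix: "/api/billing-address", upstream: "billing-address-service" },
  { prefix: "/api/billing-address/legacy", upstream: "monolith" },
];

const DEFAULT_UPSTREAM = "monolith";

// Pick the upstream for a request path using the longest matching prefix,
// so a more specific rule can carve an exception out of a broader one.
function resolveUpstream(path) {
  let best = null;
  for (const route of routes) {
    // Match the prefix exactly or at a "/" boundary, so
    // "/api/billing-addresses" does not match "/api/billing-address".
    const matches = path === route.prefix || path.startsWith(route.prefix + "/");
    if (matches && (best === null || route.prefix.length > best.prefix.length)) {
      best = route;
    }
  }
  return best ? best.upstream : DEFAULT_UPSTREAM;
}

console.log(resolveUpstream("/api/billing-address/543")); // billing-address-service
console.log(resolveUpstream("/api/checkout")); // monolith
```

Defaulting to the monolith means an unmigrated path never breaks: strangling a new feature is just a matter of adding a row to the table.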

With the middle layer in place, you can switch between your new and old services seamlessly. You could also A/B test the old and new paths, or roll back if something goes wrong during the migration.
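To A/B test or roll back, the middle layer needs to send only a share of traffic to the new service and be able to turn that share off instantly. A minimal sketch, assuming you can key the decision on a stable customer or session ID: hashing the ID keeps each customer on the same path across requests, and setting the percentage to 0 acts as the rollback switch. The 32-bit FNV-1a hash is just one simple deterministic choice, and the function names and the "billing-address-service" upstream are illustrative.

```javascript
// 32-bit FNV-1a over UTF-16 code units (identical to byte-wise FNV-1a for ASCII IDs).
// Deterministic, so the same ID always lands in the same bucket.
function fnv1a(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, keep 32 bits
  }
  return hash >>> 0;
}

// Route a stable share of customers to the new service.
// percentToNew = 0 rolls everyone back to the monolith; 100 completes the cutover.
function chooseBillingPath(customerId, percentToNew) {
  const bucket = fnv1a(String(customerId)) % 100; // 0..99
  return bucket < percentToNew ? "billing-address-service" : "monolith";
}

console.log(chooseBillingPath("customer-42", 10)); // same answer on every call
```

Because a customer’s bucket never changes, raising the percentage only moves more customers onto the new path; anyone already there stays there.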

Step 3: Build a new service and migrate data

Next, replace the old billing portion of your application with a new microservice. Here’s a configuration based on an example from Amazon’s AWS documentation that uses Amazon ECS with EC2 and Docker to deploy a billing microservice.

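This Amazon ECS task definition describes a Docker container for the new billing address service, which replaces the Oracle ATG billing address service. With the bridge network mode, a hostPort of 0 tells ECS to assign a host port dynamically, so several copies of the container can run on the same EC2 instance.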

```json
{
  "containerDefinitions": [
    {
      "name": "billing-address-service",
      "image": "[account-id].dkr.ecr.[region].amazonaws.com/[service-name]:[tag]",
      "memoryReservation": 256,
      "cpu": 256,
      "essential": true,
      "portMappings": [
        {
          "hostPort": 0,
          "containerPort": 3000,
          "protocol": "tcp"
        }
      ]
    }
  ],
  "volumes": [],
  "networkMode": "bridge",
  "placementConstraints": [],
  "family": "billing-address-service"
}
```

In most cases, you’ll also need to transfer data from your existing monolith’s database to your new microservice’s backend database.

For example, the Oracle ATG billing address service described above uses profile data. You can copy this from your Oracle application’s backend to AWS, as demonstrated below.

This sample code uses Amazon SQS to queue up a series of data transfer jobs, one message per row. Queuing is critical if you have lots of data to move: the process may take a long time, and you don’t want a single failed transfer to break the whole process. A message that isn’t processed successfully stays in the queue and can be retried.

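```javascript
import { SQSClient, SendMessageCommand } from "@aws-sdk/client-sqs";

const REGION = "us-west-1"; // replace with your region
const QUEUE_URL = "SQS_QUEUE_URL"; // replace with your queue URL

const sqs = new SQSClient({ region: REGION });

// One queue message per billing address row exported from the monolith.
// Add any other attributes you need and remove the ones you don't.
const toMessage = (row) => ({
  DelaySeconds: 12,
  MessageAttributes: {
    profileId: {
      DataType: "Number",
      StringValue: String(row.profileId),
    },
    address: {
      DataType: "String",
      StringValue: row.address,
    },
  },
  MessageBody: `Billing address for profile ${row.profileId}`,
  QueueUrl: QUEUE_URL,
});

const run = async () => {
  // Sample data; in practice, read these rows from the monolith's database.
  const rows = [{ profileId: 543, address: "29 Acacia Road, Springfield" }];
  for (const row of rows) {
    try {
      const data = await sqs.send(new SendMessageCommand(toMessage(row)));
      console.log("Success, billing address queued. MessageId:", data.MessageId);
    } catch (err) {
      // Log and continue, so one failed row doesn't stop the whole transfer.
      console.error("Error queuing profile", row.profileId, err);
    }
  }
};

run();
```

Once you’ve queued up each row, you need a service to receive and process the data. It adds each row to your new database and deletes each message from the queue once it has been processed successfully.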
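Because Amazon SQS standard queues deliver each message at least once, the receiving service can occasionally process the same row twice, for example when a save succeeds but the delete call fails and the message becomes visible again. Writing each row as an upsert keyed on the profile ID makes a repeat harmless. A minimal sketch, with an in-memory Map standing in for your new database (a hypothetical stand-in; a real database would use its own upsert or conditional write):

```javascript
// In-memory stand-in for the new service's billing address table (hypothetical).
const billingAddresses = new Map();

// Insert or overwrite by profile ID, so processing the same message twice
// still leaves exactly one row for that profile.
function upsertBillingAddress(record) {
  if (!Number.isInteger(record.profileId)) {
    throw new Error("profileId must be an integer");
  }
  const existing = billingAddresses.get(record.profileId);
  billingAddresses.set(record.profileId, { ...existing, ...record });
  return billingAddresses.get(record.profileId);
}

// Simulate a duplicate delivery of the same queued row.
const row = { profileId: 543, address: "29 Acacia Road, Springfield" };
upsertBillingAddress(row);
upsertBillingAddress(row);
console.log(billingAddresses.size); // 1
```

With that in mind, here’s the receiving service: a shared SQS client module followed by the script that consumes the queue.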

```javascript
// libs/sqsClient.js: a shared SQS client used by the consumer below
import { SQSClient } from "@aws-sdk/client-sqs";

const REGION = "us-west-1"; // replace with your region
const sqsClient = new SQSClient({ region: REGION });

export { sqsClient };
```

```javascript
// Consumer: receive queued rows, save them, then delete them from the queue
import {
  ReceiveMessageCommand,
  DeleteMessageCommand,
} from "@aws-sdk/client-sqs";
import { sqsClient } from "./libs/sqsClient.js";

// Set the parameters
const queueURL = "SQS_QUEUE_URL"; // your queue URL
const params = {
  AttributeNames: ["SentTimestamp"],
  MaxNumberOfMessages: 10,
  MessageAttributeNames: ["All"],
  QueueUrl: queueURL,
  VisibilityTimeout: 20,
  WaitTimeSeconds: 0,
};

// TODO: replace this placeholder with a write to your new service's database.
const saveBillingAddress = async (record) => {
  console.log("Saving", record);
};

const run = async () => {
  try {
    const data = await sqsClient.send(new ReceiveMessageCommand(params));
    if (!data.Messages) {
      console.log("No messages to process");
      return data;
    }
    // Handle every message in the batch, not just the first one.
    for (const message of data.Messages) {
      try {
        const attrs = message.MessageAttributes;
        await saveBillingAddress({
          profileId: Number(attrs.profileId.StringValue),
          address: attrs.address.StringValue,
        });
        // Delete only after a successful save; a failed row becomes visible
        // again after the visibility timeout and is retried.
        const deleted = await sqsClient.send(
          new DeleteMessageCommand({
            QueueUrl: queueURL,
            ReceiptHandle: message.ReceiptHandle,
          })
        );
        console.log("Message deleted", deleted);
      } catch (err) {
        console.error("Error processing message", message.MessageId, err);
      }
    }
    return data; // For unit tests.
  } catch (err) {
    console.error("Receive Error", err);
  }
};

run();
```

Once your data is transferred into the new microservice and you’ve verified it is correct, you can begin testing the new service. If everything works correctly, you can retire that part of your monolith and repeat the process with each remaining part of your application.
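Verifying the data can start with a reconciliation pass: compare the rows exported from the monolith with the rows the new service stored, matched on profile ID, and list anything missing, different, or unexpected. A minimal sketch over two in-memory arrays, assuming the two-attribute record (profile ID and address) used in this walkthrough; in practice you’d load each side from its own database, and very large tables may call for comparing in batches.

```javascript
// Compare rows exported from the monolith with rows stored by the new service.
function reconcile(sourceRows, migratedRows) {
  const migratedById = new Map(migratedRows.map((r) => [r.profileId, r]));
  const sourceIds = new Set(sourceRows.map((r) => r.profileId));
  const report = { missing: [], mismatched: [], unexpected: [] };

  for (const src of sourceRows) {
    const dst = migratedById.get(src.profileId);
    if (!dst) {
      report.missing.push(src.profileId); // never arrived in the new service
    } else if (dst.address !== src.address) {
      report.mismatched.push(src.profileId); // arrived, but the data differs
    }
  }
  for (const dst of migratedRows) {
    if (!sourceIds.has(dst.profileId)) {
      report.unexpected.push(dst.profileId); // exists only in the new service
    }
  }
  report.ok =
    report.missing.length === 0 &&
    report.mismatched.length === 0 &&
    report.unexpected.length === 0;
  return report;
}

const exported = [
  { profileId: 543, address: "29 Acacia Road, Springfield" },
  { profileId: 544, address: "31 Acacia Road, Springfield" },
];
const stored = [{ profileId: 543, address: "29 Acacia Road, Springfield" }];
console.log(reconcile(exported, stored).missing); // [ 544 ]
```

Only when the report comes back clean is it worth moving on to functional testing and, eventually, retiring the old code path.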

This migration process varies widely depending on the monolithic e-commerce platform you’re using. Still, it should give you a sense of how you can apply the strangler pattern in your application.


Conclusion

The strangler pattern can help you move away from your legacy software platform in a structured, low-risk way. It lets you make meaningful improvements quickly without breaking your existing e-commerce store.

You can thus roll out new features and turn your platform into scalable, robust services that form the core of your e-commerce business. In addition, the decoupled nature of microservices lets you use them across multiple channels.

If you’d rather not do a complete migration yourself, fabric can help. With industry-leading expertise in e-commerce microservices, we can help you migrate to our suite of e-commerce microservices using a secure, scalable method like the strangler pattern.

Tech advocate and writer @ fabric.