Building a Robust E-Commerce Service Mesh

Back in 2015, I was leading an engineering team developing a digital textbook rental platform. Microservices were relatively new at the time, but we decided that their flexibility and scalability made them a good fit for our platform.

Adopting loosely coupled microservices back then was genuinely hard. The tooling was far less mature than it is today, few startups were running microservices, and many of the terms we use now hadn’t been coined yet.

Things have come a long way in the past six years, and one of the most exciting developments is the rise of third-party, API-based platforms and service meshes. Instead of building every microservice from the ground up, you can now integrate pre-built services to add backend functionality to your application quickly.

In this post, I’ll introduce the idea of a service mesh as a means of binding your internal and third-party services together. I’ll use a microservices-based e-commerce architecture for my examples, but many of the concepts introduced in this post will help you create better integrations between any application and the third-party services it relies on.

What Is a Service Mesh?

A service mesh is a set of infrastructure components that lets microservices communicate with one another. Whereas an API gateway sits in front of your public-facing HTTP layer and handles traffic coming into your application, a service mesh connects the internal components of your application and dictates how they can interact with one another.

A common use case for a service mesh is connecting containers in a Kubernetes deployment. Each service runs in its own container, and the service mesh provides the network rules that dictate which containers can pass data to which others, and how. Engineers often implement this with sidecars (proxies deployed alongside each service’s container that handle its inbound and outbound traffic), but implementation details vary.

Using a Service Mesh for Third-Party APIs

While typically used to marshal data between pieces of your core application, a service mesh is also useful for standardizing connections to integral third-party services.

“With the service mesh pattern, we are outsourcing the network management of any inbound or outbound request made by any service (not just the ones that we build but also third-party ones that we deploy) to an out-of-process application.”

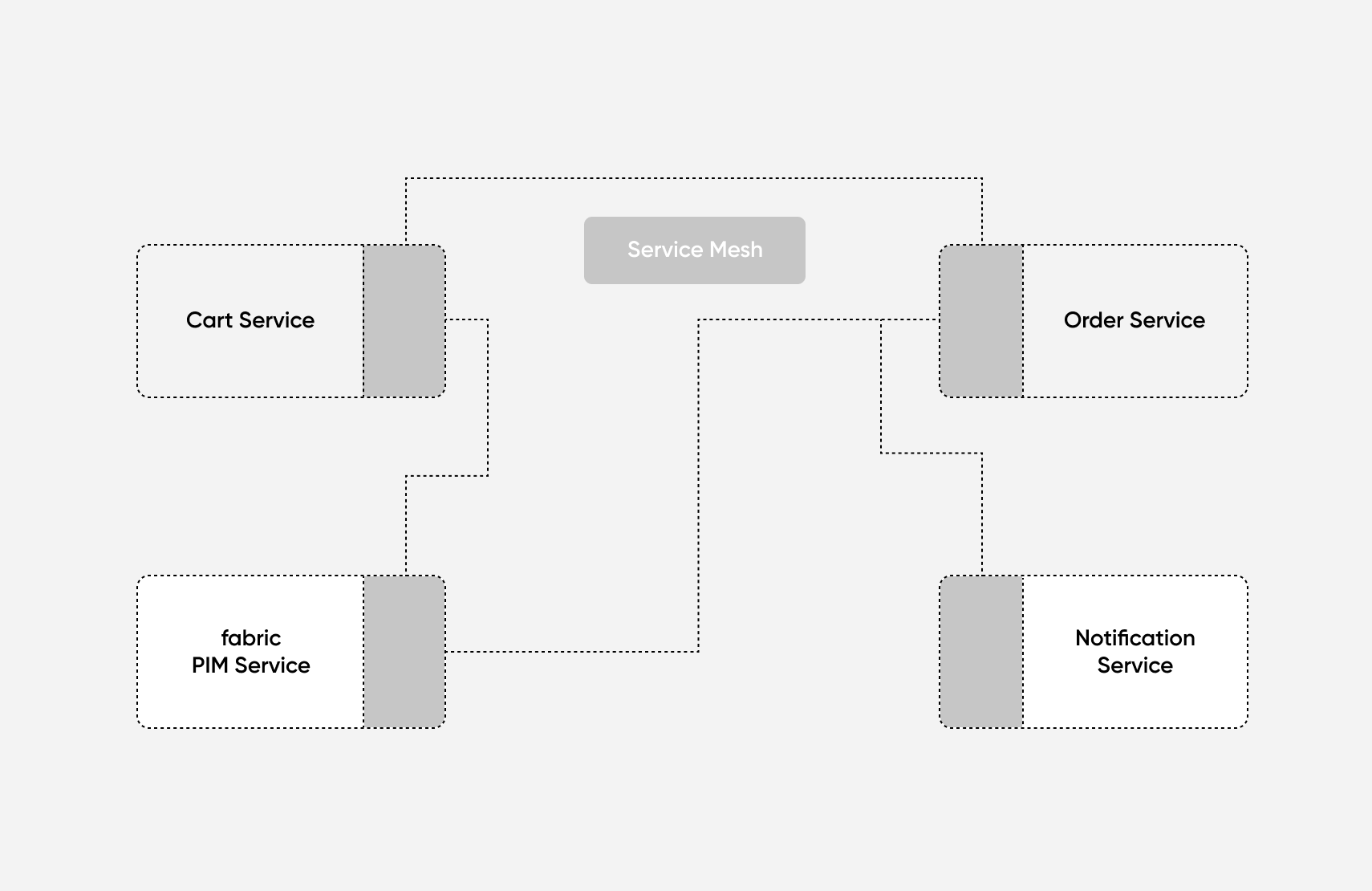

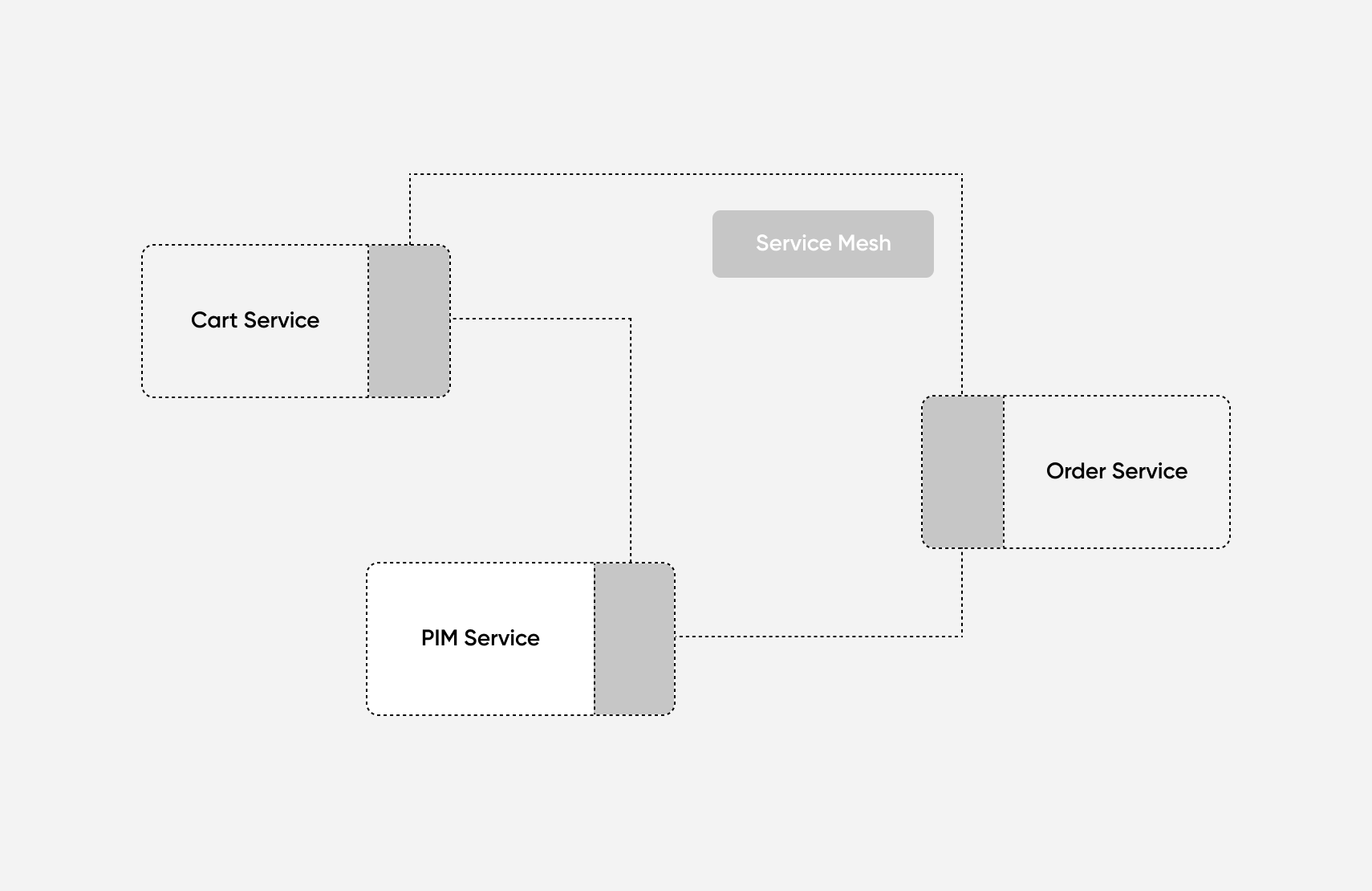

For example, let’s say you’re building an e-commerce application. You might start off with a Cart Service, Order Service, and a Product Information Management (PIM) Service:

As your application and product catalog scales, you might realize that it’s a better investment of your engineers’ time to focus on the user experience than building a PIM from scratch. So, you decide to integrate with a third-party PIM like fabric.

Next, you realize that you need to deliver multichannel notifications to your users, so you integrate with another third-party tool like Courier or Nexmo. You can use your service mesh to proxy calls to these third-party services and control the flow of data to your existing internal services.

The service mesh pattern gives your internal and external services a consistent way to communicate, and it makes it easier to swap between in-house and third-party solutions as your application evolves. Because services talk to each other through the mesh rather than directly, replacing or updating one service shouldn’t force you to refactor all the others.
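
To make the swapping idea concrete, here is a minimal Python sketch of the routing a mesh provides, reduced to an in-process lookup table. It assumes each backend is a plain function; `MeshRouter`, `in_house_pim`, and `third_party_pim` are hypothetical names, and neither handler calls a real API. Callers only ask for the logical name `"pim"`, so changing which backend serves it is a one-line registration change.

```python
from typing import Callable, Dict

# Hypothetical handler type: takes a request dict and returns a response dict.
Handler = Callable[[dict], dict]


class MeshRouter:
    """Resolves logical service names to whichever backend currently serves them."""

    def __init__(self) -> None:
        self._routes: Dict[str, Handler] = {}

    def register(self, service_name: str, handler: Handler) -> None:
        # Re-registering a name swaps the backend without touching any caller.
        self._routes[service_name] = handler

    def call(self, service_name: str, request: dict) -> dict:
        handler = self._routes.get(service_name)
        if handler is None:
            raise LookupError(f"no route registered for {service_name!r}")
        return handler(request)


def in_house_pim(request: dict) -> dict:
    # Stand-in for the original internal PIM service.
    return {"sku": request["sku"], "source": "in-house"}


def third_party_pim(request: dict) -> dict:
    # Stand-in for a proxied call to an external PIM (no network call is made).
    return {"sku": request["sku"], "source": "third-party"}


router = MeshRouter()
router.register("pim", in_house_pim)
print(router.call("pim", {"sku": "BOOK-1"}))  # {'sku': 'BOOK-1', 'source': 'in-house'}

# Later: move the PIM to a vendor; the Cart and Order services are unchanged.
router.register("pim", third_party_pim)
print(router.call("pim", {"sku": "BOOK-1"}))  # {'sku': 'BOOK-1', 'source': 'third-party'}
```

In a real mesh the lookup happens in the proxy layer over the network, but the design choice is the same: callers depend on a name, not on a specific implementation or address.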

The Functions of a Service Mesh

While the most basic purpose of a service mesh is to connect services across a network, it isn’t limited to passively transporting data. Because your service mesh handles every request between services, it is well placed to take on several key application functions:

- Logging and monitoring: Tracing and debugging in microservices is really challenging, but because every request between services passes through the mesh, it can emit consistent logs, metrics, and traces for all of them.

- Security: Because the service mesh passes requests between services, it can enforce rules about which services may call each other and which data can flow into or out of each one. It might also handle authentication between services.

- Encryption: Any communication over a network should be encrypted, and a service mesh typically handles this by encrypting traffic between services (often with mutual TLS) and administering the keys and certificates for each service.

- Load balancing: If you have multiple instances of each service, your service mesh could be responsible for distributing traffic between them.

- Failover and rate-limiting: If one of your services goes down, your service mesh is in an ideal spot to detect it, alert you, and route traffic to a healthy instance. It might also rate-limit requests to prevent you from accidentally DDoSing yourself.
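
As an illustration of the load balancing and failover functions, here is a minimal round-robin balancer in Python that skips instances marked unhealthy. The instance names are hypothetical, and health is set by hand; a real mesh would drive it from health checks or by observing failed requests.

```python
import itertools
from typing import Dict, List


class RoundRobinBalancer:
    """Spread requests across instances in turn, skipping unhealthy ones."""

    def __init__(self, instances: List[str]) -> None:
        if not instances:
            raise ValueError("at least one instance is required")
        self._instances = list(instances)
        self._healthy: Dict[str, bool] = {name: True for name in self._instances}
        self._cycle = itertools.cycle(self._instances)

    def mark(self, instance: str, healthy: bool) -> None:
        # A real mesh would update this from health checks or failed requests.
        if instance not in self._healthy:
            raise KeyError(instance)
        self._healthy[instance] = healthy

    def next_instance(self) -> str:
        # Look at each instance at most once per call before giving up.
        for _ in range(len(self._instances)):
            candidate = next(self._cycle)
            if self._healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy instances available")


lb = RoundRobinBalancer(["orders-1", "orders-2", "orders-3"])
lb.mark("orders-2", healthy=False)  # e.g. after a failed health check
print([lb.next_instance() for _ in range(4)])
# ['orders-1', 'orders-3', 'orders-1', 'orders-3']
```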

Building an E-Commerce Service Mesh

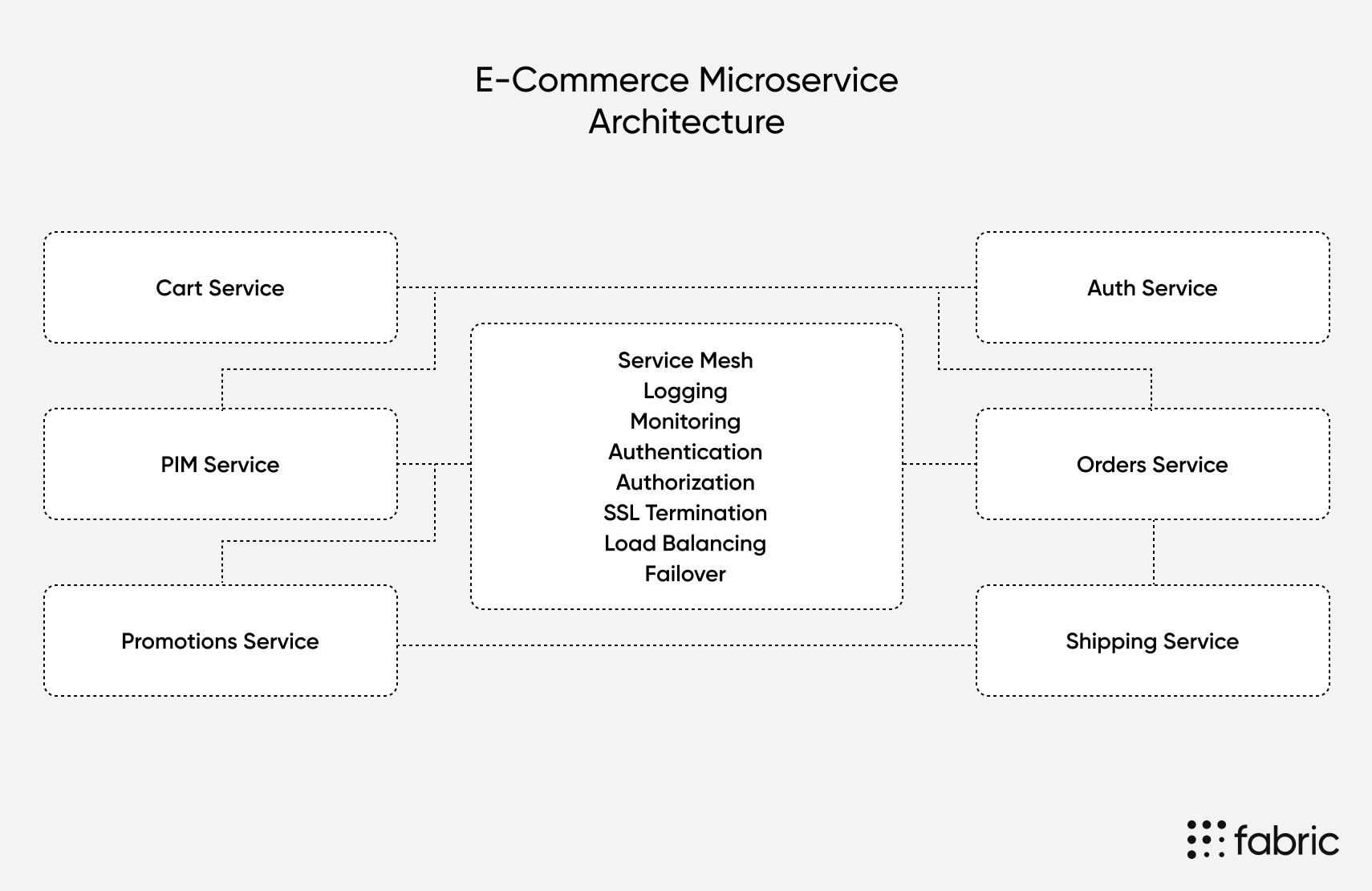

While the above functions apply generally to any service mesh, there are some specific considerations you should make if you’re building a service mesh for an e-commerce application. First, let’s take a look at how a service mesh might connect the various microservices an e-commerce application is likely to use:

Your e-commerce service mesh will be responsible for keeping all of these services connected and available. For example, the service mesh will handle encryption between your Auth Service and your Order Service to keep user data safe. It will ensure that your PIM scales up or load balances across instances to handle failures. And it will log an error when an item without a price (from the Promotions Service) is added to an order (via the Order Service).
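
Here is a minimal sketch of that last example, written as a Python hook that inspects order payloads as they pass through the mesh. The payload shape (`order_id`, `items`, `sku`, `price`) and the `check_order_items` name are assumptions for illustration; in practice this logic would live in whatever extension mechanism your mesh’s proxy supports.

```python
import logging
from typing import List

logger = logging.getLogger("mesh.orders")


def check_order_items(order: dict) -> List[str]:
    """Log an error for every line item without a price and return their SKUs.

    Assumes a hypothetical payload shape:
    {"order_id": "...", "items": [{"sku": "...", "price": 12.5}, ...]}
    """
    missing = [item["sku"] for item in order.get("items", []) if item.get("price") is None]
    for sku in missing:
        logger.error("order %s: item %s has no price", order.get("order_id"), sku)
    return missing


logging.basicConfig(level=logging.INFO)
print(check_order_items({
    "order_id": "A-100",
    "items": [{"sku": "BOOK-1", "price": 12.5}, {"sku": "BOOK-2"}],
}))  # ['BOOK-2'], after logging one error
```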

To start building a service mesh for your e-commerce application:

- Start breaking down your monolithic application. You can do this quickly by adopting third-party e-commerce microservices instead of building, managing, and updating them yourself. (We have a suite of e-commerce microservices at fabric that you can connect with any other service.)

- Find an open source or third-party service mesh. Consul, Istio, and Kuma are good options if you’re using container-based microservices, though many other reliable service meshes are available.

- Configure your service mesh and supporting tools. You’ll need to decide how to collect logs, how to install TLS certificates, and how to load balance across the instances of each service. Typically, this means spinning up new containers (or sidecars) and data volumes in your Kubernetes cluster.

- Understand the technical considerations below. These will help you choose the right service mesh and configuration based on your needs.

Technical Considerations for Building a Service Mesh

Whether you use an off-the-shelf service mesh or you build your own microservice integration layer, there are a few things that are critical to consider. In this section, I’ll review some of the most pertinent challenges.

Speed

Every step in a networked request adds latency to the total time it takes to receive data from your application. Many of the biggest challenges in using microservices are related to the speed of network requests, and because speed is so important to e-commerce applications, your service mesh needs to be prepared for this.
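
As a rough back-of-the-envelope model (an assumption, not a measurement), in a sidecar-based mesh each service-to-service call passes through two proxies, one next to the caller and one next to the callee. If a request makes n sequential calls, each taking t_i on its own, and each proxy adds an overhead o, the total is approximately:

```latex
T_{\text{total}} \approx \sum_{i=1}^{n} \left( t_i + 2o \right) = \sum_{i=1}^{n} t_i + 2no
```

With hypothetical numbers, three sequential calls of 20 ms each and 1 ms per proxy give 60 + 6 = 66 ms. The overhead grows with the number of sequential hops, which is one reason to keep call chains short and to make independent calls in parallel.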

Unfortunately, network latency is hard to control, especially when you rely on third-party solutions.

“It’s important for developers to understand the limitations of a third-party service before relying on them at scale. Can they keep up with your expected demand while maintaining the performance you require? Is their stated SLA compatible with yours?”

There are a few ways you can mitigate speed issues using a service mesh, though:

- Caching: By caching or pre-fetching data from key services, your service mesh can skip common network requests. For example, if you’re using a third-party PIM, you might also build a cache of your most commonly requested products so they can be served as quickly as possible.

- Failover: If a service gets flooded and starts to slow down or fails completely, it’s helpful to have a backup. For critical microservices, you can set up your service mesh to fail over to a mirrored instance in another region.

- Throttling and asynchronous requests: If you can’t easily fail over to another instance of your service, you can throttle requests to give it time to catch up. This works especially well for asynchronous calls, where the caller isn’t waiting on an immediate response.

- Auto-scaling: Auto-scaling is typically used for internal services, but some vendors also let you scale up your plan programmatically. Your service mesh can be in charge of raising or lowering your capacity depending on load.

- Select reliable vendors: If you’re worried about latency due to third-party APIs, carefully evaluate each vendor’s performance and SLA before you depend on it.
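
Several of these ideas combine naturally. Below is a minimal Python sketch of a product lookup that checks a time-limited cache first, then tries a primary upstream and fails over to a mirror. The upstreams are stand-in functions (no real PIM API is called), and treating `ConnectionError` as the failure signal is an assumption for illustration.

```python
import time
from typing import Callable, Dict, List, Tuple

# Hypothetical upstream type: fetches product data by ID from one endpoint.
Upstream = Callable[[str], dict]


class CachingFailoverClient:
    """Serve from a TTL cache, else try upstreams in order (primary, then mirrors)."""

    def __init__(self, upstreams: List[Upstream], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._upstreams = list(upstreams)
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[dict, float]] = {}  # id -> (product, expires_at)

    def get_product(self, product_id: str) -> dict:
        now = self._clock()
        cached = self._cache.get(product_id)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: no network request at all
        last_error: Exception = ConnectionError("no upstreams configured")
        for upstream in self._upstreams:
            try:
                product = upstream(product_id)
            except ConnectionError as exc:
                last_error = exc
                continue  # fail over to the next upstream
            self._cache[product_id] = (product, now + self._ttl)
            return product
        raise last_error


calls = {"mirror": 0}


def primary(product_id: str) -> dict:
    raise ConnectionError("primary region unavailable")  # simulate an outage


def mirror(product_id: str) -> dict:
    calls["mirror"] += 1
    return {"id": product_id, "region": "mirror"}


client = CachingFailoverClient([primary, mirror], ttl_seconds=60)
client.get_product("BOOK-1")  # primary fails, mirror answers, result is cached
client.get_product("BOOK-1")  # served from cache
print(calls["mirror"])  # 1
```

A production setup would usually add a circuit breaker as well, so that a primary known to be down isn’t retried on every cache miss.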

Security and Privacy

Improved security almost always comes at the cost of speed, but depending on the data moving across your service, it might be worth the tradeoff. Giving your service mesh responsibility for authenticating and authorizing requests between your services allows you to centralize your security rules and makes keeping them up to date much easier.
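
A minimal sketch of what centralized authorization rules might look like, assuming a deny-by-default policy table keyed by the calling service. The service names are hypothetical, and in a real mesh the caller’s identity would come from an authenticated source, such as its TLS certificate, rather than a plain string.

```python
from typing import Dict, FrozenSet

# Hypothetical central policy: each caller maps to the destinations it may call.
POLICY: Dict[str, FrozenSet[str]] = {
    "cart": frozenset({"pim", "promotions"}),
    "orders": frozenset({"cart", "payments", "notifications"}),
    "auth": frozenset(),
}


def is_allowed(caller: str, destination: str,
               policy: Dict[str, FrozenSet[str]] = POLICY) -> bool:
    """Deny by default: unknown callers and unlisted destinations are rejected."""
    return destination in policy.get(caller, frozenset())


print(is_allowed("cart", "pim"))       # True
print(is_allowed("cart", "payments"))  # False
print(is_allowed("unknown", "pim"))    # False
```

Because every rule lives in one table, tightening access (say, removing `payments` from `orders`) is a single change rather than an edit in every service.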

“The softest target in most organizations is the app layer, and attackers know this. Microservices thus both make this problem harder and easier for the defenders. Harder, because as apps are decomposed, the amount of network interdependencies grows and most dev teams themselves don’t fully understand the connectivity mesh of their apps.”

Security between microservices is one part of the challenge; the other is data privacy across services. For example, some countries have data storage laws that don’t allow personally identifying information to leave the country. If you’re operating a distributed e-commerce application with customers around the world, your service mesh needs to be able to handle these data localization requirements.
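
One way a mesh-level hook could handle this is to split a customer record so that personal fields are stored in the customer’s required region while non-identifying fields go to a shared store. This is a minimal sketch: the residency table uses the placeholder codes `XA` and `XB` and says nothing about any real country’s law, and the field and region names are hypothetical.

```python
from typing import Dict

# Placeholder rules (XA/XB are not real countries): country -> region that must hold personal data.
RESIDENCY_RULES: Dict[str, str] = {"XA": "region-a", "XB": "region-b"}
DEFAULT_REGION = "global"
PERSONAL_FIELDS = frozenset({"name", "email", "address"})  # hypothetical field names


def split_for_storage(record: dict) -> Dict[str, dict]:
    """Map each target region to the slice of the record it should store."""
    region = RESIDENCY_RULES.get(record["country"].upper(), DEFAULT_REGION)
    if region == DEFAULT_REGION:
        # No residency rule applies, so the whole record can live in one store.
        return {DEFAULT_REGION: dict(record)}
    personal = {k: v for k, v in record.items() if k in PERSONAL_FIELDS}
    other = {k: v for k, v in record.items() if k not in PERSONAL_FIELDS}
    return {region: personal, DEFAULT_REGION: other}


print(split_for_storage({"country": "XA", "email": "a@example.com", "total": 30}))
# {'region-a': {'email': 'a@example.com'}, 'global': {'country': 'XA', 'total': 30}}
```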

Observability

A significant obstacle we faced when implementing microservices in 2015 was tracing and understanding errors, and we weren’t alone.

“One of the biggest challenges that we hear from users is how difficult it is to capture metrics and data from their microservices… This is why most organizations in the ‘evaluation’ stages of adopting microservices are unsure when they will be able to deploy production applications with this type of architecture.”

Often, users complained about cryptic error messages, and our engineers struggled to figure out where in the request’s lifecycle our application failed. By implementing observability, logging, and error handling at the service mesh layer, you can have a much clearer picture of what’s going on in your microservices.
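
A minimal sketch of one piece of this: making every hop share a single request ID and log its outcome against it, so one failed checkout can be followed across services. The `x-request-id` header name is a widely used convention that I’m assuming here, and `traced` is a hypothetical wrapper standing in for what the mesh’s proxies would do.

```python
import logging
import uuid
from typing import Callable, Dict

logger = logging.getLogger("mesh")

# Assumed header name; "x-request-id" is a common convention, not a requirement.
REQUEST_ID_HEADER = "x-request-id"

Headers = Dict[str, str]
Handler = Callable[[Headers], int]  # hypothetical: returns an HTTP status code


def traced(service_name: str, handler: Handler) -> Handler:
    """Wrap a handler so each hop reuses (or creates) one request ID and logs it."""

    def wrapper(headers: Headers) -> int:
        headers = dict(headers)  # copy so the caller's dict isn't mutated
        request_id = headers.setdefault(REQUEST_ID_HEADER, uuid.uuid4().hex)
        try:
            status = handler(headers)
        except Exception:
            logger.exception("request_id=%s service=%s failed", request_id, service_name)
            raise
        logger.info("request_id=%s service=%s status=%d", request_id, service_name, status)
        return status

    return wrapper


def pim(headers: Headers) -> int:
    return 200  # stand-in for the PIM service


traced_pim = traced("pim", pim)


def orders(headers: Headers) -> int:
    # The Order Service forwards the same headers when it calls the PIM.
    return traced_pim(headers)


logging.basicConfig(level=logging.INFO)
traced("orders", orders)({})  # both log lines carry the same request_id
```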

You’ll also have to consider error handling when integrating with third-party APIs. If they provide clear HTTP error codes and messages, your service mesh can interpret these failures and pass errors up the chain appropriately. If not, you’ll have a really hard time understanding what went wrong.
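
A minimal sketch of that interpretation step: classify a vendor’s HTTP status so the mesh can decide whether to retry, fail over, or pass a clear error up to the caller. Treating 408, 429, 502, 503, and 504 as transient is a common policy rather than a universal rule, so it’s worth checking each vendor’s documentation.

```python
from enum import Enum
from typing import Optional


class Failure(Enum):
    RETRYABLE = "retryable"            # transient: retry with backoff or fail over
    CLIENT_ERROR = "client_error"      # our request was wrong; retrying won't help
    UPSTREAM_ERROR = "upstream_error"  # the vendor failed; surface it to the caller


TRANSIENT_STATUSES = frozenset({408, 429, 502, 503, 504})


def classify(status: int) -> Optional[Failure]:
    """Return None for success or redirects, otherwise the kind of failure."""
    if not 100 <= status <= 599:
        raise ValueError(f"not an HTTP status code: {status}")
    if status < 400:
        return None
    if status in TRANSIENT_STATUSES:
        return Failure.RETRYABLE
    if status < 500:
        return Failure.CLIENT_ERROR
    return Failure.UPSTREAM_ERROR


print(classify(429), classify(404), classify(500))
# Failure.RETRYABLE Failure.CLIENT_ERROR Failure.UPSTREAM_ERROR
```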

Conclusion

Whether you’re building microservices or integrating data from third-party e-commerce APIs, you’ll need to think about how data moves across the network. Too many teams treat this as an afterthought by building independent services and allowing them to communicate directly, but by adding a service mesh, you can abstract these connections away. This allows you to build a more performant, secure, and scalable distributed application.

Building a service mesh yourself is a big undertaking, so if you’re using container-based microservices, check out Consul, Istio, and Kuma. Finally, if you’re looking to integrate a powerful headless e-commerce platform into your microservices-based architecture, take a look at fabric. Our team can help you build a robust backend for your online store or mobile application without spending months of engineering time.

Tech advocate and writer @ fabric. Previously CTO @ Graide Network.